Inworld Runtime: The first AI runtime for consumer applications

MOUNTAIN VIEW, Calif., Aug. 13, 2025 (GLOBE NEWSWIRE) — Today Inworld AI released Inworld Runtime – the first AI runtime engineered to scale consumer applications. It lets developers go from prototype to production faster and supports user growth from 10 to 10M users with minimal code changes. By automating AI operations, Inworld Runtime frees up engineering resources for new product development and provides the tools to design and deploy no-code experiments. Inworld’s current partners, major media companies, AAA studios and AI-native startups, are already leveraging Runtime as the foundation of their AI stacks for their next generation of real-time, multi-million-user AI features and experiences.

Built to accelerate Inworld’s internal development, now enabling all consumer builders

The Inworld team has always aimed to ensure the benefits of AI reach everyone, everywhere, by enabling the next generation of consumer applications. Coming from Google and DeepMind, the founding team saw AI momentum flowing into business automation and professional-facing applications while consumer surfaces lagged behind. The company began with AI agents for gaming and media partners including Xbox, Disney, NVIDIA, Niantic, and NBCUniversal – use cases where LLMs excelled at conversational AI.

To accelerate work with these clients, Inworld built Runtime as internal infrastructure to handle the unique demands of consumer AI: maintaining real-time performance at multi-million concurrent user scale, meeting user-specific quality expectations focused on engagement, and keeping costs well under a cent per user per day. As companies in health and fitness, learning, and social applications began approaching Inworld, the team discovered that they faced the same challenges Runtime was already solving internally, and decided to release it publicly.

“We built Runtime because we needed it ourselves. Existing tools couldn’t deliver at the speed and scale our partners required,” said Kylan Gibbs, CEO of Inworld AI. “When we realized every consumer AI company faces these same barriers, we knew we had to open up what we’d built. We’ve watched the industry reach an inflection point. Thousands of builders are hitting the same scaling wall we did, so we hustled over the past year to add capabilities beyond our internal needs to create the universal backend to accelerate the entire consumer AI ecosystem.”

The three factors determining leaders in consumer AI

Through four years of deploying consumer AI applications, Inworld discovered three critical factors that determine success or failure. Excellence in all three is mandatory, and weakness in any one will prevent a consumer AI feature or application from achieving market leadership:

1. Time from prototype to production

While creating an AI demo takes hours, reaching production-readiness typically requires 6+ months of infrastructure and quality improvement work. Teams must handle provider outages, implement fallbacks, manage rate limits, provision and accelerate compute capacity, optimize costs, and ensure consistent quality. In building with category leaders, Inworld saw that consumer AI projects either make the leap or stall out and die in the gap between prototype and scalable reality.

2. Resource allocation to new product development

Post-launch, most engineering teams spend over 60% of their time on maintenance tasks: debugging provider changes, managing model updates, handling scale issues, and optimizing costs. This leaves minimal resources for building new features, causing products to stagnate while competitors advance. Inworld experienced this firsthand, as even innovative teams get trapped in maintenance cycles instead of building what users want next.

3. Experimentation velocity

Consumer preferences continuously evolve, but traditional deployment cycles of 2-4 weeks cannot match this pace. Teams need to test dozens of variations, measure real user impact, and scale winners, all without the friction of code deployments and app store approvals. Working with partners across the industry showed Inworld that the fastest learner wins, but existing infrastructure makes rapid iteration nearly impossible.

“We scaled from prototype to 1 million users in 19 days with over 20× cost reduction.” – Fai, Status CEO

Inworld Runtime’s technical design

Inworld Runtime delivers these capabilities through multiple innovations, including:

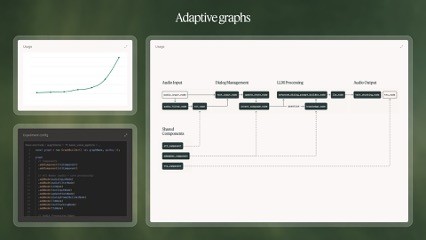

1. Adaptive Graphs

A C++-based graph execution system that addresses the scaling and cross-platform limitations faced by most AI frameworks, with SDKs for Node.js, Python and others. Developers compose applications using pre-optimized nodes as building blocks (with APIs from top providers for LLM, TTS, STT, knowledge, memory and much more) that handle low-level integration work and automatically optimize data streams between components. The same graph scales from 10 test users to 10 million concurrent users with minimal code changes and managed endpoints. Combined with vibe-coding-friendly interfaces, this directly enables the leap from prototype to production in days, not months.
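As a rough illustration of the node-and-graph composition described above, the sketch below wires three stand-in nodes (speech-to-text, LLM, text-to-speech) into a small graph and runs them in dependency order. It is plain Python and does not use the Inworld Runtime SDK; the `Graph` class, its `add_node` and `run` methods, and the `fake_*` functions are hypothetical names invented for this example.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Sequence


class Graph:
    """Hypothetical dataflow graph: each node is a function of its upstream outputs."""

    def __init__(self) -> None:
        self._funcs: Dict[str, Callable[..., object]] = {}
        self._deps: Dict[str, List[str]] = {}

    def add_node(self, name: str, func: Callable[..., object],
                 deps: Sequence[str] = ()) -> None:
        self._funcs[name] = func
        self._deps[name] = list(deps)

    def run(self, **inputs: object) -> Dict[str, object]:
        # Graph inputs are treated as nodes whose results are already known.
        results: Dict[str, object] = dict(inputs)
        # static_order() yields every node after all of its dependencies.
        for name in TopologicalSorter(self._deps).static_order():
            if name in results:
                continue
            args = [results[dep] for dep in self._deps[name]]
            results[name] = self._funcs[name](*args)
        return results


# Stand-ins for real provider calls (assumptions, not real APIs).
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_llm(text: str) -> str:
    return f"echo: {text}"


def fake_tts(reply: str) -> bytes:
    return reply.encode("utf-8")


graph = Graph()
graph.add_node("stt", fake_stt, deps=["audio"])
graph.add_node("llm", fake_llm, deps=["stt"])
graph.add_node("tts", fake_tts, deps=["llm"])

out = graph.run(audio=b"hello")
```

Swapping a provider in this sketch means replacing one node function while the graph wiring stays the same, which is the property the building-block description points to.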

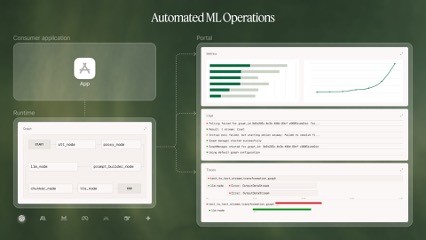

2. Automated MLOps

Beyond basic operations, Runtime provides self-contained infrastructure automation with integrated telemetry capturing logs, traces, and metrics across every interaction. Actionable insights, such as identifying bugs, user patterns, and optimization opportunities, are surfaced through the Portal, Inworld’s observability and experiment management platform. Runtime performs automatic failover between providers, manages capacity across models, and handles rate limiting intelligently. It also supports custom on-premise deployments with optimized model hosting for enterprises. As applications scale, Inworld provides access to all necessary cloud infrastructure to train, tune, and host custom models that break the cost-quality frontier of default models.
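The provider failover mentioned above can be pictured with a minimal sketch: try each provider in priority order and fall through to the next one when a call fails. This is an illustration in plain Python under stated assumptions, not Runtime's actual mechanism; `ProviderError`, `call_with_failover`, and the two example providers are hypothetical.

```python
from typing import Callable, List, Sequence


class ProviderError(Exception):
    """Hypothetical error for a failed provider call (outage, rate limit, timeout)."""


def call_with_failover(prompt: str,
                       providers: Sequence[Callable[[str], str]]) -> str:
    """Return the first successful response, trying providers in order."""
    errors: List[ProviderError] = []
    for provider in providers:
        try:
            return provider(prompt)
        except ProviderError as exc:
            # Record the failure and move on to the next provider.
            errors.append(exc)
    raise ProviderError(f"all {len(providers)} providers failed: {errors}")


# Stand-in providers (assumptions): the primary is down, the backup works.
def primary(prompt: str) -> str:
    raise ProviderError("primary is rate limited")


def backup(prompt: str) -> str:
    return f"backup: {prompt}"


reply = call_with_failover("hi", [primary, backup])
```

A production system would likely add timeouts, retries with backoff, and per-provider health tracking; the sketch only shows the ordering logic.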

3. Live Experiments

Experiments deploy or scale with one click. Because configuration is separated from code, teams can run instant A/B tests without deployment friction. Developers define variants via the SDK and manage tests through the Portal, and Runtime can automatically run hundreds of experiments simultaneously across different models, prompts, graph configurations, and logic flows. Changes deploy in seconds with automatic impact measurement on user metrics.
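To make "configuration separated from code" concrete, here is a minimal sketch of weighted A/B assignment driven by a config dictionary. It is a generic technique (deterministic hash bucketing), not the Runtime SDK or Portal API; the `EXPERIMENT` structure, the model names, and `assign_variant` are assumptions made for illustration.

```python
import hashlib

# Hypothetical experiment config. In a real system this could be fetched at
# runtime, so changing weights or models would not require a code deployment.
EXPERIMENT = {
    "name": "greeting-model-test",
    "variants": [
        {"id": "control", "weight": 50, "model": "model-a"},
        {"id": "treatment", "weight": 50, "model": "model-b"},
    ],
}


def assign_variant(user_id: str, experiment: dict) -> dict:
    """Deterministically map a user to a variant in proportion to its weight."""
    total = sum(v["weight"] for v in experiment["variants"])
    if total <= 0:
        raise ValueError("experiment needs at least one positive weight")
    # Hash experiment name + user id so the same user always gets the same bucket.
    key = f"{experiment['name']}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % total
    for variant in experiment["variants"]:
        if bucket < variant["weight"]:
            return variant
        bucket -= variant["weight"]
    raise AssertionError("unreachable: bucket is always below the total weight")


variant = assign_variant("user-123", EXPERIMENT)
```

Because assignment depends only on the experiment name and user ID, a user stays in the same variant across sessions, and editing the weights in the config shifts traffic without touching application code.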

Proven results from early adopters of Inworld Runtime

Runtime deployments demonstrate consistent technical achievements:

- Inworld’s largest partners (major IP owners, media companies and AAA studios) are already leveraging Runtime as the foundation of their AI stacks

- Wishroll scaled from prototype to 1 million users in 19 days with over 95% cost reduction

- Little Umbrella ships new AI games while using Inworld to reduce update and maintenance effort for existing titles

- Streamlabs built a multimodal real-time streaming assistant with features that were unfeasible even six months ago

- Bible Chat upgraded and scaled their voice features while reducing voice costs by 85%

- Nanobit delivers personalized AI narratives to millions at sustainable unit economics

Availability and Pricing

Developers can get started immediately by downloading Runtime SDKs at inworld.ai/runtime, with comprehensive documentation and migration guides. Runtime works natively with code assistants like Cursor, Claude Code, Google CLI, Windsurf, and Zencoder. Start with your own project or use Inworld’s templates and demo apps as inspiration. Runtime deploys flexibly, in client applications, on any cloud provider’s servers, or through custom on-premise installations with Inworld-managed model hosting. Once in production, the Portal can be used for observability and rapid experimentation.

Runtime pricing is entirely usage-based with no upfront costs. Developers can experiment with all models and capabilities and only pay for what scales successfully, ensuring alignment with consumer application economics where costs must remain sustainable as usage grows. With access to state-of-the-art models from Anthropic, Google, Mistral, and OpenAI, developers have maximum choice to easily test and select the optimal model for their use case. Runtime also provides access to top open-source models like DeepSeek, Llama, and Qwen through lightning-fast providers Groq, Tenstorrent and Fireworks AI. Developers with existing Microsoft or Google relationships can utilize their cloud commitments to access Runtime through the Azure Marketplace and Google Cloud Marketplace.

About Inworld AI

Founded in 2021, Inworld develops the AI Runtime for consumer applications, enabling scaled applications that grow into user needs and organically evolve through experience. The company has raised over $120M from investors including Lightspeed Venture Partners, Kleiner Perkins, Section32, Founders Fund, Stanford University, and Microsoft’s M12.

Inworld will host the inaugural Consumer AI Summit in Spring 2026 in San Francisco, bringing together technical leaders building and scaling the next generation of consumer AI applications.

Photos accompanying this announcement are available at:

https://www.globenewswire.com/NewsRoom/AttachmentNg/bea95113-29bd-407a-9a2c-deb888bac37b

https://www.globenewswire.com/NewsRoom/AttachmentNg/2662a56e-0a16-4fce-8c89-074c647bd73e

https://www.globenewswire.com/NewsRoom/AttachmentNg/6e71f922-c43a-47f4-9426-660b12f86f70

CONTACT: Clement | Peterson for Inworld AI, bret@clementpeterson.com