Harnessing Wikidata’s Open Knowledge Graph For Enterprise Innovation

By Dan Shick, Software Communications Manager, Wikimedia Deutschland

Enterprise IT teams are under growing pressure to reduce data costs, streamline integration across systems, and support AI and analytics at scale. But dependable, well-structured data remains difficult to access. Proprietary sources are expensive and restrictive. Internal data is often inconsistent. Connecting systems across regions and departments can become a full-time challenge.

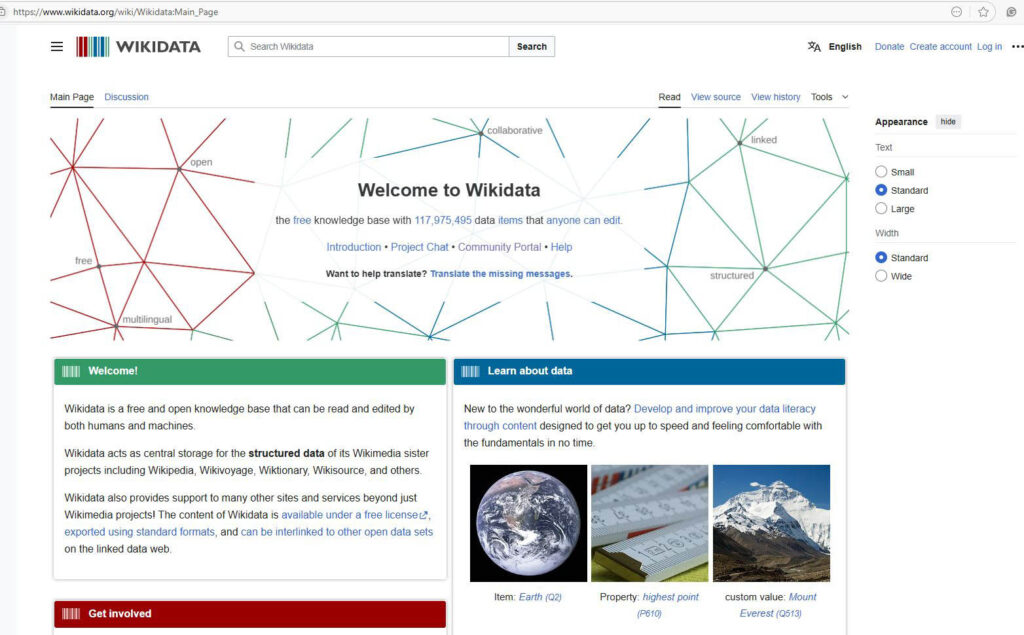

Wikidata offers a different approach. This article explores how an open, structured knowledge graph can help enterprise teams work smarter with data. Whether the goal is to cut licensing costs, improve metadata consistency, or build AI-ready infrastructure, Wikidata can play a valuable supporting role.

Wikidata's data is available in the Resource Description Framework (RDF) model and can be queried with SPARQL, the standard RDF query language. It also provides REST API access and contains more than 1.3 billion structured facts, contributed and maintained by a global community. The platform receives close to 500,000 updates each day, making it one of the most active and current open data sources available.
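To make the SPARQL access concrete, here is a minimal sketch that builds a request URL for the public Wikidata Query Service endpoint and, optionally, runs it. The example query (items that are an instance of house cat, `Q146`), the helper names `build_query_url` and `run_query`, and the User-Agent string are assumptions chosen for illustration; they do not come from Wikidata's own documentation of recommended practice.

```python
import json
import urllib.parse
import urllib.request

# Public SPARQL endpoint of the Wikidata Query Service.
WDQS_ENDPOINT = "https://query.wikidata.org/sparql"

# Example query (an assumption for illustration): five items that are
# an instance of (P31) house cat (Q146), with English labels.
EXAMPLE_QUERY = """
SELECT ?item ?itemLabel WHERE {
  ?item wdt:P31 wd:Q146 .
  SERVICE wikibase:label { bd:serviceParam wikibase:language "en" . }
}
LIMIT 5
"""


def build_query_url(sparql: str) -> str:
    """Return a GET URL that asks the endpoint for JSON results."""
    params = urllib.parse.urlencode({"query": sparql.strip(), "format": "json"})
    return f"{WDQS_ENDPOINT}?{params}"


def run_query(sparql: str, user_agent: str = "example-pilot/0.1 (placeholder)") -> list:
    """Execute the query over HTTP and return the list of result bindings.

    Requires network access. The User-Agent value is a placeholder and
    should be replaced with something that identifies the caller.
    """
    request = urllib.request.Request(
        build_query_url(sparql), headers={"User-Agent": user_agent}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["results"]["bindings"]
```

Calling `run_query(EXAMPLE_QUERY)` returns one dictionary per result row. `build_query_url` works offline, which is convenient for reviewing a query before any request is sent.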

Wikidata is also unusually well connected. Nearly 10,000 types of external identifier act as bridges between Wikidata entries and other databases, classification schemes, and platforms. These bridges carry heavy traffic: more than 250 million statements use an external identifier, and each one is a direct connection between a Wikidata entry and an entry in another website, archive, or database. This makes it easier to link and unify data across existing enterprise systems, improving tasks like entity resolution, content tagging, and data deduplication.
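As an illustration of how external identifiers can support entity resolution, the sketch below pulls every external-identifier value out of an entity document and checks whether one of them matches a value held in an internal system. The sample entity is hand-written in the shape of Wikidata's entity JSON, and its item ID and identifier values are made up; the function names are hypothetical.

```python
from typing import Any, Dict, List

# Hand-written sample shaped like Wikidata's entity JSON. The item ID and
# the identifier values are invented for illustration.
SAMPLE_ENTITY: Dict[str, Any] = {
    "id": "Q00000",
    "claims": {
        "P214": [  # VIAF ID
            {"mainsnak": {"snaktype": "value", "property": "P214",
                          "datatype": "external-id",
                          "datavalue": {"value": "EXAMPLE-VIAF-1", "type": "string"}}}
        ],
        "P31": [  # instance of: an item reference, not an external identifier
            {"mainsnak": {"snaktype": "value", "property": "P31",
                          "datatype": "wikibase-item",
                          "datavalue": {"value": {"entity-type": "item", "id": "Q5"},
                                        "type": "wikibase-entityid"}}}
        ],
        "P213": [  # ISNI recorded as "unknown value", so there is no datavalue
            {"mainsnak": {"snaktype": "somevalue", "property": "P213",
                          "datatype": "external-id"}}
        ],
    },
}


def external_ids(entity: Dict[str, Any]) -> Dict[str, List[str]]:
    """Map each external-identifier property ID to its list of values."""
    found: Dict[str, List[str]] = {}
    for pid, statements in entity.get("claims", {}).items():
        for statement in statements:
            snak = statement.get("mainsnak", {})
            if snak.get("datatype") != "external-id":
                continue  # skip items, dates, quantities, and so on
            if snak.get("snaktype") != "value":
                continue  # skip "unknown value" and "no value" snaks
            found.setdefault(pid, []).append(snak["datavalue"]["value"])
    return found


def matches_internal_record(entity: Dict[str, Any], pid: str, internal_value: str) -> bool:
    """True if the entity carries internal_value under property pid."""
    return internal_value in external_ids(entity).get(pid, [])
```

For the sample above, `external_ids(SAMPLE_ENTITY)` contains only the `P214` entry, because the other two statements are either not external identifiers or carry no value.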

Wikidata is already in use in AI and computation workflows. LangChain uses it to support factual grounding in generative AI models. Wolfram Alpha integrates it into structured queries for knowledge-based reasoning. These examples show how Wikidata can strengthen the accuracy and traceability of advanced systems, especially when used alongside internal data.

Multilingual support is built in. Wikidata provides labels, aliases, and descriptions in hundreds of languages, which allows enterprise teams to standardize how data is represented across markets and regions. For teams supporting multilingual catalogs, global compliance reporting, or international customer platforms, consistency like this is a significant practical advantage.
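One way to use these multilingual labels is a simple language-fallback lookup: ask for a label in the market's preferred language and fall back to others if it is missing. The sketch below assumes an entity document in the shape of Wikidata's entity JSON; the sample is hand-written and abridged, and the function name `pick_label` is hypothetical.

```python
from typing import Any, Dict, Optional, Sequence

# Hand-written, abridged sample in the shape of Wikidata's entity JSON
# for the item representing Germany (Q183).
SAMPLE_LABELS_ENTITY: Dict[str, Any] = {
    "id": "Q183",
    "labels": {
        "en": {"language": "en", "value": "Germany"},
        "de": {"language": "de", "value": "Deutschland"},
        "fr": {"language": "fr", "value": "Allemagne"},
        "es": {"language": "es", "value": "Alemania"},
    },
}


def pick_label(
    entity: Dict[str, Any],
    preferred_languages: Sequence[str],
    default: Optional[str] = None,
) -> Optional[str]:
    """Return the first available label, trying languages in order."""
    labels = entity.get("labels", {})
    for language in preferred_languages:
        entry = labels.get(language)
        if entry:
            return entry["value"]
    return default


# A storefront for a Portuguese-speaking market might prefer Portuguese,
# then Spanish, then English. The sample has no "pt" label, so the
# Spanish label is used.
label = pick_label(SAMPLE_LABELS_ENTITY, ["pt", "es", "en"])
```

Keeping the fallback order in configuration rather than in code lets each market define its own preference without touching the lookup logic.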

Several projects also demonstrate how Wikidata supports real-world information systems:

- Cividata creates a centralized directory of nonprofits by pulling together structured data. Corporate social responsibility teams could apply similar techniques to track NGO partnerships or regional outreach.

- Aletheiafact verifies political statements in Brazil using Wikidata entries. Media platforms and brand teams can use comparable methods to validate user-generated content or flag potential misinformation.

- Sangkalak provides access to Bengali literature on Wikisource, which is a sister project to Wikidata. Educational publishers or regional content managers could use this approach to organize and surface local content.

- Inventaire is a distributed book-sharing platform across more than 50 countries. Digital libraries and e-learning platforms could adopt this model to manage decentralized content assets.

- Govdirectory maps public institutions in 37 countries into a searchable interface to make it easier for citizens to engage with their government. Policy, legal, and compliance teams can apply similar structures to monitor regulatory frameworks.

- Paulina helps users explore public-domain books using Wikidata’s data. The same method can support personalized discovery features in education and publishing systems.

Wikidata runs on MediaWiki, the same software that powers Wikipedia, extended by Wikibase, the software that adds structured data. For organizations with more specific data needs, Wikibase can be used to create custom knowledge graphs that still interoperate with Wikidata. This makes it easier to share, connect, or migrate data between systems over time.

In the AI space, Wikidata’s role is expanding. The Embedding Project, an initiative led by Wikimedia Deutschland, Jina.AI, and DataStax, converts Wikidata entries into machine-readable vector formats. These embeddings can be used to improve semantic search, enable context-aware chatbot responses, and reduce hallucinations in LLMs. For teams exploring large language models or knowledge retrieval, this creates a new layer of flexibility and control.
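To show the general idea behind embedding-based semantic search, here is a toy sketch that ranks entries by cosine similarity to a query vector. It does not use the Embedding Project's data or any of its interfaces: the three-dimensional vectors, the entry keys, and the function names are all invented for illustration, and real embeddings would have hundreds of dimensions and come from an embedding model.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def top_matches(
    query: Sequence[float],
    index: Dict[str, Sequence[float]],
    k: int = 2,
) -> List[Tuple[str, float]]:
    """Return the k entries most similar to the query, best first."""
    scored = [(key, cosine_similarity(query, vec)) for key, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Made-up vectors standing in for embedded entries.
TOY_INDEX: Dict[str, Sequence[float]] = {
    "entry_a": [1.0, 0.0, 0.0],
    "entry_b": [0.8, 0.6, 0.0],
    "entry_c": [0.0, 0.0, 1.0],
}

# A made-up query vector that points mostly in the direction of entry_a.
results = top_matches([0.9, 0.1, 0.0], TOY_INDEX, k=2)
```

In a retrieval pipeline, the top-ranked entries would then be passed to a language model as context, which is one way such embeddings can help ground its answers.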

The open and collaborative nature of Wikidata is another of its long-term benefits. A global contributor base helps keep the dataset current, diverse, and representative. Events like WikidataCon support this growth by coordinating improvements across sectors and geographies. This is especially useful for teams working in markets where proprietary data is limited or outdated.

Wikidata isn’t meant to replace commercial datasets, but it can reduce over-reliance on them. It can improve workflows for metadata, content classification, compliance, and AI readiness. It can also fill important gaps in multilingual or market-specific information.

IT and data leaders can start experimenting with Wikidata by using the REST API or SPARQL endpoint to test practical use cases. Common starting points include named entity recognition, content categorization, and entity matching across systems. Integration doesn’t require overhauling existing infrastructure – it can begin with lightweight pilots and scale gradually.
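A lightweight pilot for entity matching could look something like the sketch below. It builds a search request for a name found in an internal system and turns the response into a list of candidate items for review. Note the assumptions: it uses the `wbsearchentities` module of the MediaWiki Action API on wikidata.org rather than the REST API, the sample response is hand-written and abridged, and the function names are hypothetical.

```python
import urllib.parse
from typing import Any, Dict, List, Tuple

# MediaWiki Action API endpoint on wikidata.org.
ACTION_API = "https://www.wikidata.org/w/api.php"


def build_search_url(name: str, language: str = "en", limit: int = 5) -> str:
    """Return a URL that searches Wikidata items by label or alias."""
    params = {
        "action": "wbsearchentities",
        "search": name,
        "language": language,
        "type": "item",
        "limit": limit,
        "format": "json",
    }
    return f"{ACTION_API}?{urllib.parse.urlencode(params)}"


def candidates(response: Dict[str, Any]) -> List[Tuple[str, str, str]]:
    """Extract (item ID, label, description) tuples from a search response."""
    return [
        (hit["id"], hit.get("label", ""), hit.get("description", ""))
        for hit in response.get("search", [])
    ]


# Hand-written, abridged stand-in for a search response, used so the
# parsing step can be tried without network access.
SAMPLE_RESPONSE: Dict[str, Any] = {
    "search": [
        {"id": "Q64", "label": "Berlin", "description": "capital of Germany"},
        {"id": "Q00000", "label": "Berlin"},  # made-up entry without a description
    ]
}

search_url = build_search_url("Berlin")
matches = candidates(SAMPLE_RESPONSE)
```

A human reviewer or a scoring rule can then choose among the candidates, and the chosen item ID can be stored alongside the internal record as a stable link.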

For enterprises rethinking how to source and structure data, Wikidata is worth serious consideration. It offers flexibility, openness, and interoperability at a time when all three are in short supply.

About the author

Dan Shick, Software Communications Manager, Wikimedia Deutschland

Dan Shick is a seasoned professional with extensive experience in software communications and technical writing. He has served as Software Communications Manager at Wikimedia Deutschland since April 2020, where his responsibilities include managing external communications for the Software Engineering department and enhancing documentation for Wikidata, MediaWiki and Wikibase. Previously, Dan worked as a freelance translator, editor, and technical writer, and held several engineering roles at organizations such as audibene GmbH and Blackboard. Dan has a strong educational background in linguistics and foreign languages from the University of California, Berkeley, and Santa Rosa Junior College.