Why Context Will Be a Key 2026 AI Enabler

By Dominik Tomicevic, Founder and CEO of Memgraph

Large language models (LLMs) succeed or fail based on the quality of their data, yet many current approaches fall short when it comes to handling incomplete or flawed information. Enterprise architect Dominik Tomicevic suggests graph technology may be the solution.

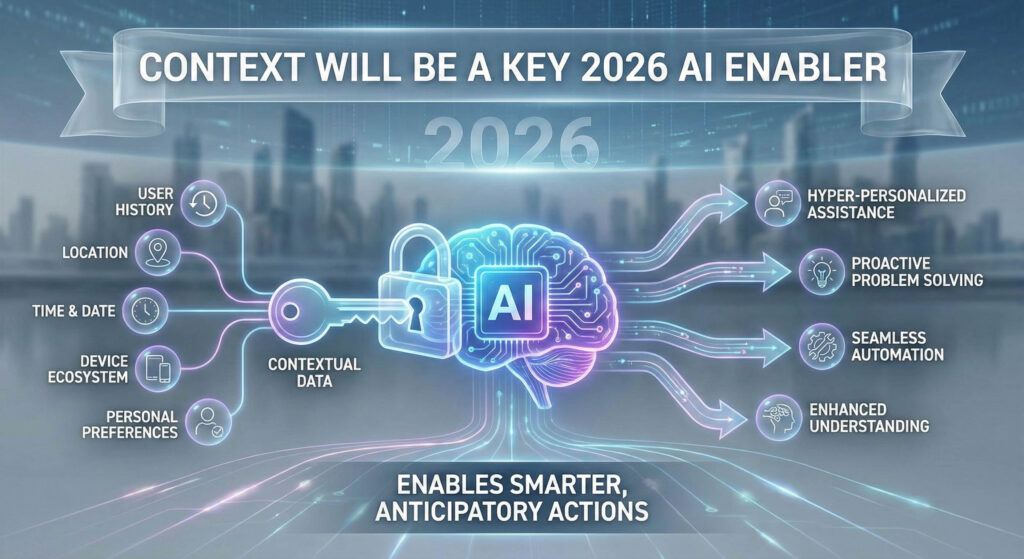

We all want our AI to be genuinely useful, and at first, prompt engineering seemed like the way to achieve that. But it’s become clear that prompts alone aren’t enough. Developer focus is now rapidly shifting toward context, not just prompt design. Why?

According to Gartner, context adds the depth that can transform AI ‘from a black-and-white comic strip into a fully immersive 3D world’. Graph-based approaches, particularly GraphRAG, are emerging as the ideal way to make context engineering work effectively.

Over the course of a busy 2025, we’ve learned that for an LLM to be truly useful, it must be taught—securely and within your corporate firewall—about your processes, data, and domain. Generic LLMs simply aren’t familiar enough with your specific problem space; they need structured guidance and reinforcement.

The effort is well worth it. Once your in-house AI is contextually extended, it can reason effectively and deliver meaningful, actionable answers to business users.

We loved prompts…

At first, prompt engineering seemed like the solution. Surely carefully crafting instructions to coax better results from generative AI models would yield increasingly accurate answers. But in the field, this approach proved too broad. An LLM can only draw on what it has already learned: information included in its training data or supplied to it as context.

As a result, prompt engineering alone can’t generate company-specific insights, such as internal financial forecasts, because that data simply isn’t in the model. Without the right context, the model will hallucinate.

In other words, you can craft the most elegant prompts, but if the model doesn’t know where to look or how to reason across the problem space, you won’t get much further. AI systems work best within tight constraints. Even well-funded teams must be disciplined in their use of expensive tokens. They can’t search endlessly until they find a satisfying answer, or they risk a ‘British Museum’ search: a brute-force enumeration of every possibility, whose cost grows exponentially.
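To see why exhaustive search is ruled out, assume (purely for illustration) a search tree with a uniform branching factor b, explored to depth d. A brute-force search may have to visit every node:

```latex
N(b, d) = \sum_{i=0}^{d} b^{i} = \frac{b^{d+1} - 1}{b - 1},
\qquad
N(10, 6) = \frac{10^{7} - 1}{9} = 1\,111\,111
```

Each additional level of depth multiplies the work by roughly b, which is why narrowing the search space pays off far more than searching harder.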

The missing link is sharper, deliberately engineered definitions of context. Unlike prompt engineering, which provides only limited situational guidance, modern context engineering grounds model outputs in governed, up-to-date, and relevant data. It constrains and focuses what the model knows, ensures the right evidence is surfaced, and enables the LLM to reason effectively within a defined search space.

The missing piece of the jigsaw is graph technology

What we know now is that truly effective AI depends on context. The next question is how well that context can be captured, structured, and retrieved.

There are multiple techniques for doing this, and each must perform to a high standard. While many developers argue that context engineering can be achieved with conventional Retrieval-Augmented Generation (RAG) alone, our experience suggests otherwise. In practice, we see far stronger results when RAG over unstructured data is delivered through a structured knowledge-graph layer, as in GraphRAG.

GraphRAG, developed last year by Microsoft Research, is rapidly emerging as a powerful tool for narrowing search spaces and tackling a key challenge in modern AI: preventing LLMs from being overwhelmed by context. Its strength lies in iterative, selective summarization. Instead of flooding a model with massive token windows, GraphRAG continuously refines and delivers only the most relevant, preprocessed context, keeping responses both focused and accurate.

To be precise, the advantage comes not just from retrieval, but from the knowledge graph itself. Graph-based approaches are widely recognized in the developer community for their ability to capture, in granular detail, the relationships between customers, products, policies, events, and other entities. This enables systems to pinpoint exactly the slice of data needed for any given task.
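The ‘slice of data’ idea can be illustrated with a minimal sketch: start from one entity, collect only the facts within k hops of it, and turn them into context lines for the prompt. The triples, entity names, and helper functions below are hypothetical, and a production system would typically run an equivalent traversal as a query inside the graph database rather than in application code.

```python
from collections import deque

# Hypothetical toy knowledge graph stored as (subject, relation, object) triples.
TRIPLES = [
    ("Acme Corp", "HAS_CONTRACT", "Contract-17"),
    ("Contract-17", "COVERS", "Widget Pro"),
    ("Contract-17", "GOVERNED_BY", "Refund Policy v2"),
    ("Widget Pro", "MANUFACTURED_AT", "Plant B"),
    ("Globex", "HAS_CONTRACT", "Contract-22"),
    ("Contract-22", "COVERS", "Gadget Lite"),
]


def build_adjacency(triples):
    """Index each triple under both endpoints so traversal ignores edge direction."""
    adj = {}
    for s, r, o in triples:
        adj.setdefault(s, []).append((s, r, o))
        adj.setdefault(o, []).append((s, r, o))
    return adj


def k_hop_context(triples, seed, k):
    """Breadth-first search: return the triples reachable within k hops of `seed`."""
    adj = build_adjacency(triples)
    seen_nodes = {seed}
    seen_triples = set()
    selected = []  # keeps discovery order, nearest facts first
    frontier = deque([(seed, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == k:
            continue  # do not expand beyond the hop limit
        for triple in adj.get(node, []):
            if triple not in seen_triples:
                seen_triples.add(triple)
                selected.append(triple)
            s, _, o = triple
            neighbor = o if s == node else s
            if neighbor not in seen_nodes:
                seen_nodes.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return selected


def to_prompt_lines(triples):
    """Render triples as compact lines that can be placed in an LLM prompt."""
    return [f"{s} -[{r}]-> {o}" for s, r, o in triples]


if __name__ == "__main__":
    for line in to_prompt_lines(k_hop_context(TRIPLES, "Acme Corp", 2)):
        print(line)
```

With k = 2, the sketch returns Acme Corp’s contract, the product it covers, and the policy that governs it, while the unrelated Globex contract and the three-hop manufacturing fact stay out of the context.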

Knowledge graphs naturally expose missing links, outdated information, and inconsistencies, allowing AI to reason across silos and ensuring that stakeholder knowledge flows coherently throughout the system.
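As a hypothetical illustration of how a graph makes such gaps visible, the sketch below flags contracts that lack a governing policy (a missing link) and entities holding conflicting values for a property assumed to be single-valued (an inconsistency). The data and function names are invented for this example; in a graph database, these checks would usually be written as queries.

```python
from collections import defaultdict

# Hypothetical facts; "status" is assumed to be single-valued per entity.
TRIPLES = [
    ("Contract-17", "GOVERNED_BY", "Refund Policy v2"),
    ("Contract-17", "status", "active"),
    ("Contract-22", "status", "active"),
    ("Contract-22", "status", "terminated"),  # e.g. two source systems disagree
]
CONTRACTS = ["Contract-17", "Contract-22"]


def missing_links(entities, triples, relation):
    """Return entities that have no outgoing edge of the given relation type."""
    has_relation = {s for s, r, _ in triples if r == relation}
    return [e for e in entities if e not in has_relation]


def conflicts(triples, single_valued):
    """Return (entity, property) pairs with more than one distinct value."""
    values = defaultdict(set)
    for s, r, o in triples:
        if r in single_valued:
            values[(s, r)].add(o)
    return {key: sorted(v) for key, v in values.items() if len(v) > 1}


if __name__ == "__main__":
    print("No governing policy:", missing_links(CONTRACTS, TRIPLES, "GOVERNED_BY"))
    print("Conflicting values:", conflicts(TRIPLES, {"status"}))
```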

Swerving the context rot cul-de-sac

Common sense would suggest that the more a model consumes, the smarter it should be. But LLMs are not human, and their architectures and context windows have limits. The reality is that the more you feed a model, the higher the risk of mistakes or misinterpretation, not of greater insight.

Multiple studies confirm this: beyond a certain context size, model accuracy tends to decline (see, for example, an August 2024 Databricks paper). Recent research from Chroma has reinforced these findings and coined the term ‘context rot’ to describe the phenomenon.

The takeaway is clear: giving an LLM the minimum relevant information for a task produces better results, and human-in-the-loop curation helps ensure the right amount of context is included. The gold standard for hallucination mitigation involves structuring, filtering, summarizing, and continuously testing the context so that only essential information enters the model’s working memory, with knowledge graphs ensuring the model always works with the most relevant, structured information.
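The filtering step can be sketched minimally as follows: drop low-relevance snippets, remove duplicates, and greedily pack the most relevant remaining ones into a fixed budget. This assumes relevance scores are supplied by some upstream retriever; word counts stand in for tokens as a deliberate simplification (a real pipeline would use the model’s own tokenizer), and the threshold and budget values are arbitrary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snippet:
    text: str
    relevance: float  # assumed to come from an upstream scorer, in [0, 1]


def approx_tokens(text):
    # Crude proxy: whitespace-separated words, not real model tokens.
    return len(text.split())


def assemble_context(snippets, budget, min_relevance=0.5):
    """Filter, deduplicate, and greedily pack the most relevant snippets."""
    chosen, used, seen = [], 0, set()
    for snip in sorted(snippets, key=lambda item: item.relevance, reverse=True):
        if snip.relevance < min_relevance:
            break  # sorted descending, so everything after is less relevant
        if snip.text in seen:
            continue  # exact duplicate of a snippet already chosen
        cost = approx_tokens(snip.text)
        if used + cost > budget:
            continue  # too big for what is left; a shorter one may still fit
        chosen.append(snip)
        seen.add(snip.text)
        used += cost
    return chosen


if __name__ == "__main__":
    candidates = [
        Snippet("Contract-17 is governed by Refund Policy v2", 0.9),
        Snippet("Refund Policy v2 allows returns within 30 days", 0.8),
        Snippet("Contract-17 is governed by Refund Policy v2", 0.85),
        Snippet("The cafeteria menu changes on Mondays", 0.1),
        Snippet("Widget Pro is made at Plant B", 0.6),
    ]
    for snip in assemble_context(candidates, budget=15):
        print(snip.text)
```

Skipping an oversized snippet, rather than stopping at it, lets a shorter but slightly less relevant snippet still use the remaining budget.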

Primed for Agentic success?

As we enter a phase where large-scale deployment of Agentic AI is increasingly expected, ensuring enterprise-level AI performance is critical. The evidence is clear that this performance has to be underwritten by context, not just prompts.

I also believe that success in the field shows the best foundation for scalable AI is the magic combination of knowledge graphs, GraphRAG, and careful context management.

The author is CEO and Co-founder of Memgraph, a high-performance, in-memory graph database that serves as a real-time context engine for AI applications, powering enterprise solutions with richer context, sub-millisecond query performance, and explainable results that developers can trust.